first add


commit

2bc0bd855e

94

README.md

Normal file


@ -0,0 +1,94 @@

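## Automated Deployment Service and Alibaba Cloud OSS Usage

To reduce manual deployment work, an automated deployment system is now in place. This document describes how to use it.

### I. Install and configure the client

- Before first use, install and configure the Alibaba Cloud OSS client on your own computer:
  - Download and install the ossBrowser client: https://help.aliyun.com/document_detail/209974.htm#task-2065478
  - Client configuration: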

```
endpoint=oss-cn-hangzhou.aliyuncs.com
accessKeyID=${yourAccessKeyID}
accessKeySecret=${yourAccessKeySecret}
Preset OSS path: oss://ztupload/
```

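The values above are for illustration only. The actual key and secret are provided separately, outside this document. Leave any field not mentioned above empty.

Bucket name: **ztupload**. After opening the client, the directory hierarchy under this bucket is as follows: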

```
.
├── production
│   ├── investigate
│   │   ├── backEnd
│   │   │   └── investigate.jar
│   │   └── frontEnd
│   │       └── zhuize.zip
│   ├── logs
│   │   ├── autoDeploy.log
│   │   └── ${serviceName}.log
│   └── ztsjxxxt
│       ├── backEnd
│       │   └── ztsjxxxt.jar
│       └── frontEnd
│           └── html.zip
└── stage
    ├── investigate
    │   ├── backEnd
    │   │   └── investigate.jar
    │   └── frontEnd
    │       └── zhuize.zip
    ├── logs
    │   ├── autoDeploy.log
    │   └── ${serviceName}.log
    └── ztsjxxxt
        ├── backEnd
        │   └── ztsjxxxt.jar
        └── frontEnd
            └── html.zip
```

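Notes:

1. The accountability (追责) system's front-end package is zhuize.zip and its back-end package is investigate.jar; the audit (审计) system's front-end package is html.zip and its back-end package is ztsjxxxt.jar.
2. production corresponds to the China Railway (中铁) environment; stage corresponds to the company intranet environment.
3. Keep the directory structure and files exactly as shown in the tree above. **Do not modify the existing directory structure, delete existing files, or upload unrelated files.**
4. The logs directory contains:
   - autoDeploy.log: log of events that occur while the automated deployment service runs. Uploading a front-end zip package triggers an update of this file within 5 minutes.
   - ${serviceName}.log: log of the back-end service, for example investigate.log. Uploading a back-end jar package triggers an update of this file within 5 minutes.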

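### II. Manual steps

Once the OSS client is installed and configured, a routine release consists of the following steps.

#### 1. Upload

1. Upload the built front-end or back-end package for the project to its designated location in the private Alibaba Cloud bucket. There is no need to delete the existing file first: uploading a file with the same name replaces it automatically.
2. After about 5 minutes, the corresponding service will have been redeployed automatically with the new package.

> Warning! Do nothing other than upload a new package over the existing one. If you accidentally upload to the wrong location or delete an existing package in OSS, automatic deployment is simply not triggered. But if you change the directory hierarchy or rename a directory, **subsequent automatic deployments will stop working!**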
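For those who prefer the command line to ossBrowser, the upload step could be sketched as follows. This is only an illustration: it assumes `ossutil` is installed and configured with the same credentials, and the helper names `oss_target` and `upload_package` and the `DRY_RUN` variable are hypothetical, not part of the deployment system.

```shell
#!/bin/bash
# Hypothetical helper: build the OSS object path for a package, following
# the bucket layout shown in the directory tree of this README.
oss_target() {
    local env=$1 project=$2 kind=$3 file=$4
    echo "oss://ztupload/${env}/${project}/${kind}/${file}"
}

# Upload a freshly built package over the existing object of the same name.
# With DRY_RUN set, only print the command that would be run.
upload_package() {
    local env=$1 project=$2 kind=$3 path=$4
    local target
    target=$(oss_target "$env" "$project" "$kind" "$(basename "$path")")
    if [ -n "${DRY_RUN:-}" ]; then
        echo "ossutil cp -f $path $target"
    else
        ossutil cp -f "$path" "$target"
    fi
}
```

For example, `upload_package stage investigate backEnd ./target/investigate.jar` would overwrite the stage back-end jar; the local path here is made up.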

#### 2. 查看log (可选操作)

|

||||

以下操作可了解部署详情

|

||||

|

||||

1. 上传完成5分钟后,用ossBrowser查看并刷新 production/${项目名}/${logs} 目录下的内容

|

||||

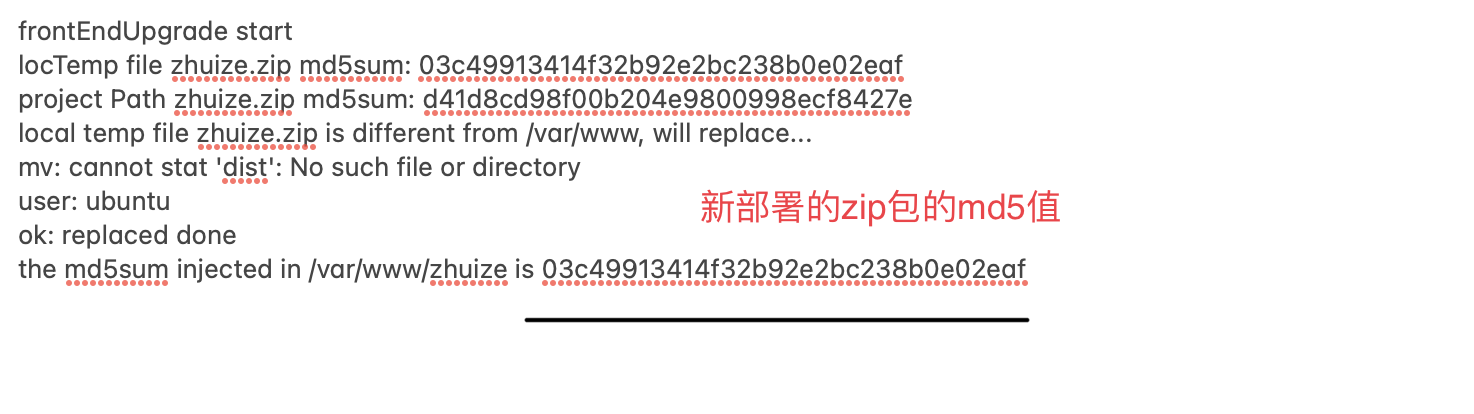

2. 如果上传的是前端zip包,logs目录下的autoDeloy.log的文件创建时间会更新到最新。点击查看log,找到类似如下图的相关信息

|

||||

|

||||

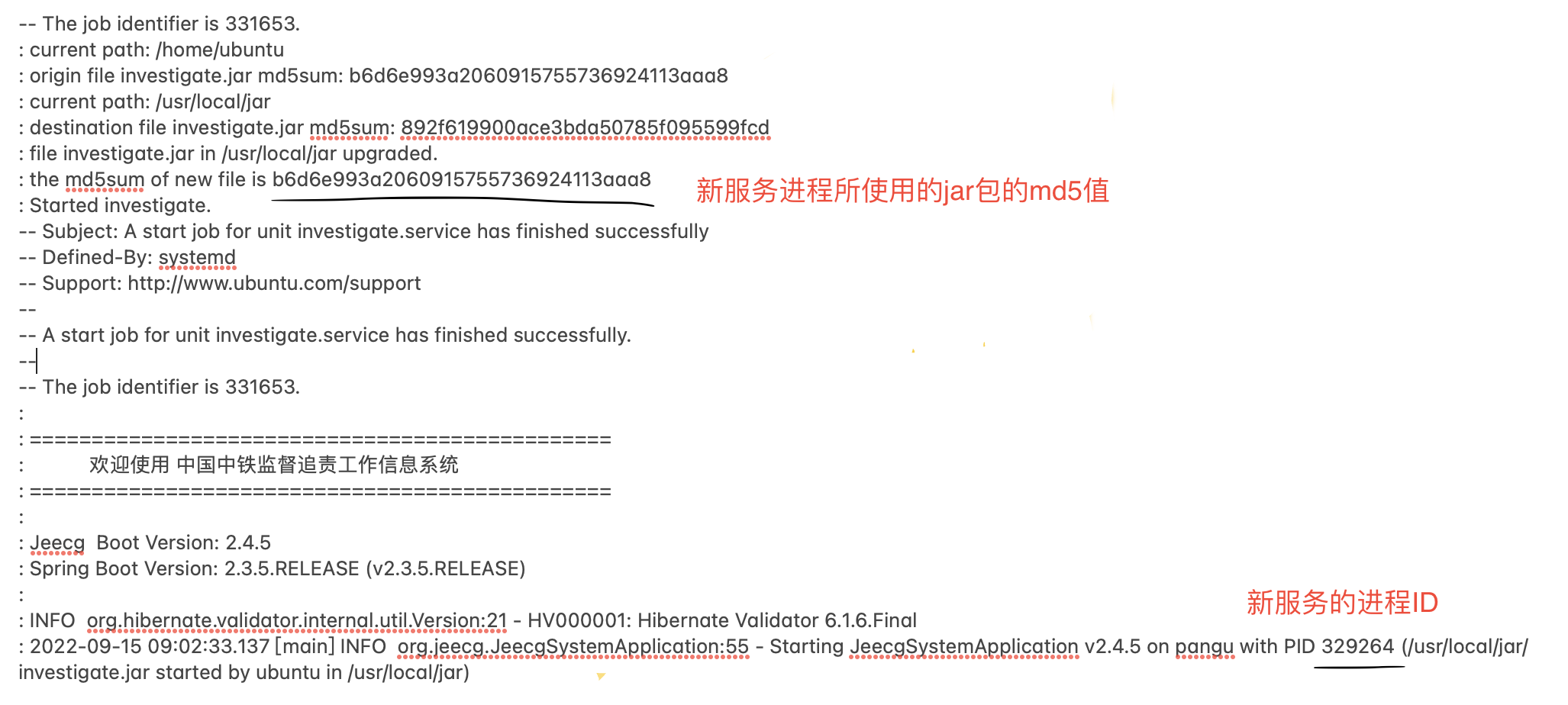

3. 如果上传的是后端jar包,logs目录下的${项目名}.jar的文件创建时间会更新到最新。点击查看log,找到类似如下图的相关信息

|

||||

|

||||

|

||||

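#### 3. Verify (optional)

To confirm that the deployed package is the same version as the one you uploaded, compare the md5 of your latest local build with the md5 of the jar/zip currently used by the service as reported in the log (the underlined md5 value in the screenshot above).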
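The comparison described above can be done by hand, or with a small helper along these lines. This is a minimal sketch: the function names `md5_of` and `same_md5` are illustrative, and the expected hash is whatever value you copy from the log.

```shell
#!/bin/bash
# Print only the md5 hash of a file (md5sum prints "<hash>  <name>").
md5_of() {
    md5sum "$1" | awk '{print $1}'
}

# Return 0 when the local build matches the md5 copied from the log.
same_md5() {
    local file=$1 expected=$2
    [ "$(md5_of "$file")" = "$expected" ]
}
```

A typical call would be `same_md5 investigate.jar <md5-from-log> && echo "versions match"`.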

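### III. Automated steps

The following happens automatically and needs no manual action; it is described for reference only.

1. Every VM managed by this system runs a scheduled task every 5 minutes that checks the md5 information of specific files at specific locations in the bucket. If it differs from the md5 of the locally cached package, the VM downloads the file and replaces the local cache.
2. When the related service is restarted, the md5 of the cached package is first compared with that of the package the service is currently running. If they differ, the cached package is copied into the project's runtime directory, replacing the running package; the service process is then killed and started again.
3. The related logs are uploaded to OSS.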
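The decision made in step 1 can be pictured with the sketch below. It is an assumption-laden illustration, not the repository's implementation: `needs_update` is a hypothetical name, and the remote md5 is passed in as an argument, whereas the scripts in this commit compare `ossutil stat` metadata.

```shell
#!/bin/bash
# Return 0 (update needed) when the cached package is missing or its md5
# differs from the md5 reported for the object in the bucket.
needs_update() {
    local cached=$1 remote_md5=$2 local_md5
    [ -f "$cached" ] || return 0
    local_md5=$(md5sum "$cached" | awk '{print $1}')
    [ "$local_md5" != "$remote_md5" ]
}
```

A scheduled job running every 5 minutes would call this once per managed package and download the object only when it returns 0.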

11

aa.sh

Executable file


@ -0,0 +1,11 @@

|

||||

ct=218

|

||||

san=""

|

||||

for((i=$ct; i>1; i-=3)); do

|

||||

san=""

|

||||

echo $i

|

||||

for ((ii=$ct; ii>-1; ii-=3)); do

|

||||

san=$san"^^^"

|

||||

done

|

||||

echo $san

|

||||

echo $i

|

||||

done

|

||||

67

backEndUpgrade.sh

Executable file


@ -0,0 +1,67 @@

|

||||

#!/bin/bash

|

||||

fileName=$1

|

||||

projectPath=$2

|

||||

PATH=$PATH:/usr/bin:/usr/sbin:/usr/local/bin

|

||||

|

||||

basePath=$3

|

||||

if [ ! -d $basePath ]; then

|

||||

mkdir $basePath -p

|

||||

fi

|

||||

sleep 1

|

||||

cd $basePath

|

||||

echo "current path: $(pwd)"

|

||||

if [ ! -f "$fileName" ];then

|

||||

echo "backEndUpgrade: file $fileName does not exist!"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

oriSum=$(md5sum $fileName)

|

||||

oriSum="${oriSum% *}"

|

||||

echo "origin file $fileName md5sum: $oriSum"

|

||||

|

||||

if [ ! -d "$projectPath" ]; then

|

||||

echo "directory $projectPath does not exist!"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

cd $projectPath

|

||||

desSum=""

|

||||

if [ ! -f "$fileName" ];then

|

||||

cp $basePath/$fileName .

|

||||

exit 0

|

||||

else

|

||||

desSum=$(md5sum $fileName)

|

||||

desSum="${desSum% *}"

|

||||

echo "current path: $(pwd)"

|

||||

echo "destination file ${fileName} md5sum: ${desSum}"

|

||||

fi

|

||||

|

||||

if [ ${oriSum} = ${desSum} ];then

|

||||

echo "destination file is same with origin file,no need to replace"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

if [ -f "$fileName"_bak2 ];then

|

||||

rm "${fileName}"_bak2

|

||||

fi

|

||||

if [ -f "${fileName}"_bak1 ];then

|

||||

mv ${fileName}_bak1 ${fileName}_bak2

|

||||

fi

|

||||

if [ -f "$fileName"_bak ];then

|

||||

mv ${fileName}_bak ${fileName}_bak1

|

||||

fi

|

||||

if [ -f "$fileName" ];then

|

||||

mv ${fileName} ${fileName}_bak

|

||||

fi

|

||||

|

||||

cp $basePath/"${fileName}" .

|

||||

chgrp nogroup ${fileName}

|

||||

chown nobody ${fileName}

|

||||

|

||||

result=$?

|

||||

if [ $result = 0 ];then

|

||||

echo "file ${fileName} in ${projectPath} upgraded."

|

||||

fi

|

||||

newSum=$(md5sum $fileName)

|

||||

newSum="${newSum% *}"

|

||||

echo "the md5sum of new file is ${newSum} "

|

||||
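The `_bak`, `_bak1`, `_bak2` shuffle in backEndUpgrade.sh keeps three generations of the previous package. The same idea can be written as one function; the sketch below is illustrative only, and the name `rotate_backups` is not from the repository.

```shell
#!/bin/bash
# Rotate backups of FILE in the current directory, keeping three
# generations: FILE_bak (newest), FILE_bak1, FILE_bak2 (oldest).
rotate_backups() {
    local file=$1
    rm -f "${file}_bak2"
    [ -f "${file}_bak1" ] && mv "${file}_bak1" "${file}_bak2"
    [ -f "${file}_bak" ] && mv "${file}_bak" "${file}_bak1"
    [ -f "$file" ] && mv "$file" "${file}_bak"
    return 0
}
```

After `rotate_backups investigate.jar` the current jar has become `investigate.jar_bak`, so the new package can be copied into place without losing the previous versions.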

44

backUpDB.sh

Executable file


@ -0,0 +1,44 @@

|

||||

#!/bin/bash

|

||||

|

||||

dbUser=$1

|

||||

dbPassword=$2

|

||||

dbHost=$3

|

||||

dbName=$5

|

||||

dbPort=$4

|

||||

backUpPath=$6

|

||||

ossPath=$7

|

||||

hostName=$HOSTNAME

|

||||

|

||||

PATH=$PATH:/usr/bin:/usr/sbin:/usr/local/bin

|

||||

|

||||

check_port() {

|

||||

nc -z -w5 $dbHost $dbPort

|

||||

}

|

||||

|

||||

cd /tmp

|

||||

rm ${hostName}_${dbName}.sql_bak3.tar.gz -f || true

|

||||

mv ${hostName}_${dbName}.sql_bak2.tar.gz ${hostName}_${dbName}.sql_bak3.tar.gz -f || true

|

||||

mv ${hostName}_${dbName}.sql_bak1.tar.gz ${hostName}_${dbName}.sql_bak2.tar.gz -f || true

|

||||

mv ${hostName}_${dbName}.sql.tar.gz ${hostName}_${dbName}.sql_bak1.tar.gz -f || true

|

||||

|

||||

sed -i 's/lower_case_table_names=1/lower_case_table_names=0/g' /root/mysql/conf/append.cnf

|

||||

if check_port; then

|

||||

echo "port $dbPort is active; everything is fine."

|

||||

else

|

||||

echo "warning: port $dbPort is not active; check the database connection."

|

||||

docker restart maria

|

||||

sleep 10

|

||||

fi

|

||||

/usr/bin/mysqldump -R --opt --protocol=TCP --user="${dbUser}" --password="${dbPassword}" --host="${dbHost}" --port=${dbPort} ${dbName} > /tmp/${hostName}_${dbName}.sql

|

||||

sed -i 's/lower_case_table_names=0/lower_case_table_names=1/g' /root/mysql/conf/append.cnf

|

||||

|

||||

|

||||

|

||||

rm sql -fr || true

|

||||

mkdir sql

|

||||

mv ${hostName}_${dbName}.sql sql

|

||||

tar -czvf ${hostName}_${dbName}.sql.tar.gz sql

|

||||

|

||||

cp /tmp/${hostName}_${dbName}.sq*.tar.gz ${backUpPath} -f

|

||||

#echo "ossPath: "${ossPath}

|

||||

#ossutil cp ${dbName}.sql.tar.gz oss://${ossPath}/ -

|

||||

106

backUpIncremental.sh

Executable file


@ -0,0 +1,106 @@

|

||||

#!/bin/bash

|

||||

currentTime=$(date +"%Y%m%d%H")

|

||||

userName=$1

|

||||

password=$2

|

||||

dbName=$3

|

||||

host=$4

|

||||

# Directory where backup files are stored

|

||||

backUpPath=$5

|

||||

ossPath=$6

|

||||

execPath=""

|

||||

|

||||

if [ -z "$7" ]; then
    # No seventh argument given: use the default
    execPath="docker exec maria /usr/bin/mysqlbinlog"
else
    # Seventh argument given: use its value
    execPath="$7"
fi

|

||||

|

||||

|

||||

# Read the binlog file name and position recorded by the previous backup
LAST_BACKUP=$(cat "$backUpPath/last_backup.txt" 2>/dev/null)
LAST_BINLOG=$(echo "$LAST_BACKUP" | awk '{print $1}')
LAST_POSITION=$(echo "$LAST_BACKUP" | awk '{print $2}')

# Get the current binlog file name and position
CURRENT_BINLOG=$(mysql -u$userName -p$password -h$host -N -e "SHOW MASTER STATUS" | awk '{print $1}')
CURRENT_POSITION=$(mysql -u$userName -p$password -h$host -N -e "SHOW MASTER STATUS" | awk '{print $2}')

# On the first run there is no previous record, so set initial values
if [[ -z "$LAST_BACKUP" ]]; then
    LAST_BINLOG="ON.000001"
    LAST_POSITION="0"
fi

|

||||

echo ""

|

||||

|

||||

echo current_binlog $CURRENT_BINLOG
echo current_binPosition $CURRENT_POSITION
# Back up the incremental changes to a file
# If the previous binlog file name and position are empty, do a full backup (currently disabled)
cd $backUpPath
#if [ -z "$LAST_BINLOG" ]; then
# mysqldump -u $MYSQL_USER -p$MYSQL_PASSWORD -h $MYSQL_HOST $MYSQL_DATABASE > "$BACKUP_DIR/full_backup.sql"
#else
echo "docker exec maria /usr/bin/mysqlbinlog -u"${userName}" -p"${password}" -h"${host}" --start-position="${LAST_POSITION}" --stop-position="${CURRENT_POSITION}" "$LAST_BINLOG" > "${backUpPath}"/"${dbName}"-"${currentTime}".sql"
$execPath -u${userName} -p${password} -h${host} --start-position=${LAST_POSITION} --stop-position=${CURRENT_POSITION} /var/lib/mysql/$LAST_BINLOG > ${backUpPath}/${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql
zip ${backUpPath}/${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql.zip ${backUpPath}/${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql
rm ${backUpPath}/${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql
# Upload under the same object name ($file is not set yet at this point, so name the object explicitly)
ossutil cp ${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql.zip oss://$ossPath/${dbName}-incremental-${LAST_POSITION}-${CURRENT_POSITION}-${currentTime}.sql.zip
#fi
# Record the binlog file name and position of this backup
echo "$CURRENT_BINLOG $CURRENT_POSITION" > "last_backup.txt"

|

||||

|

||||

calculate_hour_diff() {
    # Takes two time strings (YYYYMMDDHH) as arguments
    local time1=$1
    local time2=$2

    # Extract the year, month, day and hour from each time string
    local year1=${time1:0:4}
    local month1=${time1:4:2}
    local day1=${time1:6:2}
    local hour1=${time1:8:2}

    local year2=${time2:0:4}
    local month2=${time2:4:2}
    local day2=${time2:6:2}
    local hour2=${time2:8:2}

    # Convert the time strings to Unix timestamps
    local timestamp1=$(date -d "$year1-$month1-$day1 $hour1:00:00" +%s)
    local timestamp2=$(date -d "$year2-$month2-$day2 $hour2:00:00" +%s)

    # Difference between the two timestamps, in hours
    local diff_hours=$(( ($timestamp2 - $timestamp1) / 3600 ))

    # Print the result
    echo $diff_hours
}

|

||||

|

||||

|

||||

CURRENT_DATE=$(date +%Y%m%d%H)

|

||||

OLD_BACKUPS=()

|

||||

for file in ${dbName}-incremental-*.sql.zip; do

|

||||

file_date=$(echo "$file" | grep -oP "\d{10}(?=.sql.zip)")

|

||||

if [ ${#file_date} -le 1 ]; then

|

||||

continue

|

||||

fi

|

||||

echo $file_date

|

||||

difft=$(calculate_hour_diff $file_date $CURRENT_DATE )

|

||||

echo difft $difft

|

||||

if [[ difft -gt 24 ]]; then

|

||||

echo $file $CURRENT_DATE $file_date $((CURRENT_DATE - file_date))

|

||||

OLD_BACKUPS+=("$file")

|

||||

fi

|

||||

done

|

||||

|

||||

|

||||

# Iterate over the array, printing each file name; incremental backups older than 24 hours are deleted

|

||||

for file in "${OLD_BACKUPS[@]}"; do

|

||||

echo "$file"

|

||||

rm $file

|

||||

ossutil rm oss://$ossPath/$file

|

||||

done

|

||||
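backUpIncremental.sh keeps its state in `last_backup.txt` as a single line of the form `<binlog> <position>`. Reading that file with first-run defaults could look like the sketch below; `read_last_backup` is a hypothetical helper, and the default binlog name is passed in by the caller rather than hard-coded.

```shell
#!/bin/bash
# Read "<binlog> <position>" from a state file. When the file is missing or
# empty, fall back to the given default binlog name and position 0.
# Prints the two values separated by a space.
read_last_backup() {
    local state_file=$1 default_binlog=$2
    local binlog="" position=""
    if [ -s "$state_file" ]; then
        read -r binlog position < "$state_file" || true
    fi
    echo "${binlog:-$default_binlog} ${position:-0}"
}
```

Usage might be `read -r LAST_BINLOG LAST_POSITION <<< "$(read_last_backup "$backUpPath/last_backup.txt" ON.000001)"`, which avoids the `cat` error on the very first run.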

85

backup.sh

Executable file


@ -0,0 +1,85 @@

#!/bin/bash

# Current date and hour, used to stamp the backup file
current_time=$(date +"%Y%m%d%H")
userName=$1
password=$2
dbName=$3
host=$4
backUpPath=$5
ossPath=$6

# Backup file name
backup_file="${dbName}_backUp_${current_time}.sql"

echo "backup_file: "${backup_file}
mkdir ${backUpPath} -p || true
# Back up the MySQL database
mysqldump --column-statistics=0 -R --opt -u${userName} -p"${password}" -h${host} ${dbName} > ${backUpPath}/"$backup_file"
cd ${backUpPath}
rm sql -rf || true
mkdir sql || true
mv ${backup_file} sql/
tar -czvf ${backup_file}".tar.gz" "sql/"
ossutil cp ${backup_file}".tar.gz" oss://${ossPath}/${backup_file}".tar.gz"
rm -fr sql

calculate_hour_diff() {
    # Takes two time strings (YYYYMMDDHH) as arguments
    local time1=$1
    local time2=$2

    # Extract the year, month, day and hour from each time string
    local year1=${time1:0:4}
    local month1=${time1:4:2}
    local day1=${time1:6:2}
    local hour1=${time1:8:2}

    local year2=${time2:0:4}
    local month2=${time2:4:2}
    local day2=${time2:6:2}
    local hour2=${time2:8:2}

    # Convert the time strings to Unix timestamps
    local timestamp1=$(date -d "$year1-$month1-$day1 $hour1:00:00" +%s)
    local timestamp2=$(date -d "$year2-$month2-$day2 $hour2:00:00" +%s)

    # Difference between the two timestamps, in hours
    local diff_hours=$(( ($timestamp2 - $timestamp1) / 3600 ))

    # Print the result
    echo $diff_hours
}
# Array holding the names of backup files older than 168 hours
old_backup_files=()

cd ${backUpPath}
# Find backup files older than 168 hours and store their names in the array
CURRENT_DATE=$(date +%Y%m%d%H)
for file in ${dbName}_backUp_*.sql.tar.gz; do
    file_date=$(echo "$file" | grep -oP "\d{10}(?=.sql.tar.gz)")
    if [ ${#file_date} -le 1 ]; then
        continue
    fi
    echo $file_date
    difft=$(calculate_hour_diff $file_date $CURRENT_DATE )
    echo difft $difft
    if [[ difft -gt 168 ]]; then
        echo $file $CURRENT_DATE $file_date $((CURRENT_DATE - file_date))
        old_backup_files+=("$file")
    fi
done

# Iterate over the array and delete the old backups, locally and in OSS
for file in "${old_backup_files[@]}"; do
    rm "$file" || true
    ossutil rm oss://${ossPath}/$file -f || true
done

# Delete backups older than 10 days (disabled)
#delete_date=$(date -d "10 days ago" +%Y-%m-%d)
#delete_file="${dbName}_backup_${delete_date}.sql.tar.gz"
#rm ${backUpPath}/"$delete_file"

# Log the result
echo "[$current_time] ${dbName} database backup finished; backups older than 168 hours removed"
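The retention check in backup.sh depends on turning a `YYYYMMDDHH` stamp into hours of age. A compact variant of that calculation is sketched below, assuming GNU `date` (for `-d`); the names `stamp_to_epoch`, `hours_between` and `is_expired` are illustrative. Pinning `TZ=UTC` is a choice made here so that daylight-saving changes cannot skew the difference.

```shell
#!/bin/bash
# Convert a YYYYMMDDHH stamp to seconds since the epoch (GNU date assumed).
stamp_to_epoch() {
    local iso
    iso=$(echo "$1" | sed -E 's/^([0-9]{4})([0-9]{2})([0-9]{2})([0-9]{2})$/\1-\2-\3 \4:00:00/')
    TZ=UTC date -d "$iso" +%s
}

# Whole hours from stamp $1 to stamp $2.
hours_between() {
    local from to
    from=$(stamp_to_epoch "$1")
    to=$(stamp_to_epoch "$2")
    echo $(( (to - from) / 3600 ))
}

# Return 0 when the file stamp $1 is more than $3 hours older than "now" ($2).
is_expired() {
    [ "$(hours_between "$1" "$2")" -gt "$3" ]
}
```

Note that subtracting the raw stamps, as the debug `echo` in the loop does, is not an hour count; only the epoch-based difference is.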

36

checkPort.sh

Executable file


@ -0,0 +1,36 @@

#!/bin/bash

function checkPort() {
    ip_address=$1
    port=$2

    # Probe the connection with telnet and capture its output
    output=$(timeout 3 telnet $ip_address $port 2>&1)

    # Print the captured output (debugging only; can be removed)
    echo "Telnet Output: $output"

    # Decide from the output whether the connection succeeded
    if echo "$output" | grep -q -E "Unable to connect|Connection closed by"; then
        # Output contains "Unable to connect" or "Connection closed by":
        # return 0 to signal a failed connection (inverted from the usual shell convention)
        return 0
    else
        # Otherwise return 1 to signal a successful connection
        return 1
    fi
}

# Call checkPort to determine whether the port is active
checkPort $1 $2
res=$?

# Report the result according to the port state
if [ "$res" -eq 1 ]; then
    echo "service on $1 $2 worked normally"
else
    echo "service port: $2 is not active, try to restart it"
    eval $3 # run the service restart command
fi

exit
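checkPort.sh infers the port state from telnet's text output. An alternative that relies on an exit status instead is sketched below, using bash's `/dev/tcp` pseudo-device; it assumes a bash build with that feature and the coreutils `timeout` command, and `port_open` is a hypothetical name. Unlike `checkPort`, it follows the usual convention that 0 means success.

```shell
#!/bin/bash
# Return 0 when a TCP connection to HOST:PORT succeeds within 3 seconds.
# Inside the child bash, $0 is the host and $1 is the port.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

A caller could then write `port_open "$host" "$port" || eval "$restart_cmd"`, where `restart_cmd` stands for the restart command passed as the third argument.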

125

checkService.sh

Executable file


@ -0,0 +1,125 @@

|

||||

#!/bin/bash

|

||||

## Usage

|

||||

# Useful when the shell on the VM cannot run sudo: run this script as root, which sidesteps the sudo restriction

|

||||

## ./checkService.sh ztsjxxxt.jar "/usr/local/jar" ztsjxxxt "/home/ubuntu" "production/investigate/backEnd"

|

||||

|

||||

|

||||

fileName=$1

|

||||

projectPath=$2

|

||||

serviceName=$3

|

||||

workPath=$4

|

||||

ossLogPath=$5

|

||||

preName="${fileName%.*}"

|

||||

|

||||

protol=oss

|

||||

|

||||

PATH=$PATH:/usr/bin:/usr/sbin:/usr/local/bin

|

||||

|

||||

if [ ! -d $workPath ]; then

|

||||

echo workPath $workPath not exist!

|

||||

exit 1

|

||||

fi

|

||||

|

||||

|

||||

function uploadLog()

|

||||

{

|

||||

ctype=$1

|

||||

identify=$2

|

||||

count=$3

|

||||

echo "current user is ${USER}"

|

||||

echo "current log is " ${workPath}/${identify}.log

|

||||

journalctl -n $count -xe -${ctype} $identify | grep -wv " at " > ${workPath}/${identify}.log

|

||||

ossutil cp -f ${workPath}/${identify}.log ${protol}://${ossLogPath}/logs/${identify}.log

|

||||

if [ $? -eq 0 ]; then

|

||||

echo "ok: log ${identify} uploaded to oss!"

|

||||

return 0

|

||||

else

|

||||

echo "error: upload log ${identify} to oss failed!"

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

function compare(){

|

||||

cd $workPath

|

||||

echo "current path: $(pwd)"

|

||||

if [ ! -f "$fileName" ];then

|

||||

echo "checkService: file $fileName does not exist!"

|

||||

return 1

|

||||

fi

|

||||

|

||||

oriSum=$(md5sum $fileName)

|

||||

oriSum="${oriSum% *}"

|

||||

echo "origin local temp file $fileName md5sum: |${oriSum}|"

|

||||

|

||||

if [ ! -d "$projectPath" ]; then

|

||||

echo "directory $projectPath does not exist!"

|

||||

return -1

|

||||

fi

|

||||

|

||||

cd $projectPath

|

||||

desSum=""

|

||||

if [ ! -f "$fileName" ];then

|

||||

cp $workPath/$fileName .

|

||||

return 0

|

||||

else

|

||||

desSum=$(md5sum $fileName)

|

||||

desSum="${desSum% *}"

|

||||

echo "current path: $(pwd)"

|

||||

echo "locfile in $projectPath ${fileName} md5sum: |${desSum}|"

|

||||

fi

|

||||

|

||||

if [ ${oriSum} = ${desSum} ];then

|

||||

#journalctl -n 1000 -xe -t autoDeploy > ${workPath}/autoDeploy.log

|

||||

return 0

|

||||

else

|

||||

echo $oriSum > ${workPath}/${fileName}_md5.txt

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

function restart(){

|

||||

sleep 4

|

||||

systemctl restart $serviceName

|

||||

if [ $? -ne 0 ]; then

|

||||

echo "error: systemctl restart ${serviceName} failed!"

|

||||

sleep 3

|

||||

uploadLog t "autoDeploy" 100

|

||||

exit 0

|

||||

fi

|

||||

echo "ok: systemctl restart ${serviceName} success!"

|

||||

ossInfoTempPath=${workPath}/${fileName}_oss.info

|

||||

ossInfoProjectPath=${projectPath}/${fileName}_oss.info

|

||||

echo ossInfoTempPath: $ossInfoTempPath

|

||||

echo ossInfoProjectPath: $ossInfoProjectPath

|

||||

rm -f ${ossInfoProjectPath}

|

||||

cp ${ossInfoTempPath} ${projectPath}

|

||||

cp ${workPath}/${fileName}_md5.txt ${projectPath}

|

||||

|

||||

}

|

||||

|

||||

function main(){

|

||||

echo " "

|

||||

echo "start checkService service"

|

||||

compare

|

||||

result=$?

|

||||

echo "result $result"

|

||||

if [ $result = 0 ];then

|

||||

# uploadLog

|

||||

echo "local temp file ${fileName} is same with file in ${projectPath} ,no need to replace"

|

||||

echo "ok: checkService done"

|

||||

echo " "

|

||||

exit 0

|

||||

elif [ $result = 1 ]; then

|

||||

echo "local temp file $fileName is different from ${projectPath}, will replace..."

|

||||

else

|

||||

exit 0

|

||||

fi

|

||||

restart

|

||||

sleep 45

|

||||

uploadLog u $serviceName 500

|

||||

echo "ok: checkService done"

|

||||

echo " "

|

||||

exit 0

|

||||

}

|

||||

|

||||

main

|

||||

111

dbUpgrade.sh

Executable file


@ -0,0 +1,111 @@

|

||||

#!/bin/bash

|

||||

|

||||

#dbUpgrade.sh dbUpdate.sql "192.168.96.36" "root" "xtph9638642" "3306" "/tmp/autoDeploy" "ztupload/stage/sql"

|

||||

|

||||

sqlFile=$1

|

||||

dbHost=$2

|

||||

dbUser=$3

|

||||

dbPassword=$4

|

||||

dbPort=$5

|

||||

tempPath=$6

|

||||

ossPath=$7

|

||||

|

||||

projectName="sql"

|

||||

projectPath=${tempPath}/${projectName}

|

||||

|

||||

filename_no_extension="${sqlFile%.sql}"

|

||||

dbName="${filename_no_extension:0:3}"

|

||||

|

||||

protol=oss

|

||||

|

||||

function init(){

|

||||

cd $tempPath

|

||||

if [ ! -d $projectName ]; then

|

||||

mkdir $projectName -p

|

||||

fi

|

||||

}

|

||||

|

||||

function compareMd5(){

|

||||

cd $tempPath

|

||||

oriSum=$(md5sum $sqlFile)

|

||||

oriSum="${oriSum% *}"

|

||||

desSum=""

|

||||

echo "origin file $sqlFile md5sum: $oriSum"

|

||||

|

||||

cd $projectPath

|

||||

if [ ! -f "$sqlFile" ];then

|

||||

cp $tempPath/$sqlFile .

|

||||

return 1

|

||||

else

|

||||

desSum=$(md5sum $sqlFile)

|

||||

desSum="${desSum% *}"

|

||||

echo "current path: $(pwd)"

|

||||

echo "destination file ${sqlFile} md5sum: ${desSum}"

|

||||

fi

|

||||

if [ ${oriSum} = ${desSum} ];then

|

||||

return 0

|

||||

else

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

function replaceAndExec(){

|

||||

cd $projectPath

|

||||

rm $sqlFile

|

||||

rm sqlOut.log

|

||||

cp $tempPath/$sqlFile .

|

||||

# Get the current date formatted as YYYY-MM-DD
current_date=$(date +'%Y-%m-%d')
# Get the current time formatted as HH:MM:SS
current_time=$(date +'%H:%M:%S')

|

||||

fileName="abc_${current_date}-${current_time}.sql"

|

||||

/usr/bin/mysqldump -u${dbUser} -p${dbPassword} -P${dbPort} $dbName > $fileName

|

||||

/usr/bin/mysql -u${dbUser} -p${dbPassword} -P${dbPort} -e "$(cat ${sqlFile})" | tee sqlOut.log

|

||||

ossutil cp -f sqlOut.log ${protol}://${ossPath}/${dbHost}/sqlOut.log

|

||||

}

|

||||

function uploadLog()

|

||||

{

|

||||

ctype=$1

|

||||

identify=$2

|

||||

count=$3

|

||||

echo "current user is ${USER}"

|

||||

journalctl -n $count -xe -${ctype} $identify > ${tempPath}/${identify}.log

|

||||

ossutil cp -f ${tempPath}/${identify}.log ${protol}://${ossPath}/${dbHost}/${identify}.log

|

||||

if [ $? -eq 0 ]; then

|

||||

echo "ok: log ${identify} uploaded to oss!"

|

||||

return 0

|

||||

else

|

||||

echo "error: upload log ${identify} to oss failed!"

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

function main() {

|

||||

echo " "

|

||||

sleep 5

|

||||

echo "dbUpgrade start"

|

||||

init

|

||||

compareMd5

|

||||

result=$?

|

||||

if [ ${result} = 0 ];then

|

||||

echo "local temp file ${sqlFile} is same with ${projectPath},no need to replace"

|

||||

echo "ok: dbUpgrade done"

|

||||

echo " "

|

||||

exit 0

|

||||

else

|

||||

echo "local temp file $sqlFile is different from ${projectPath}, will replace and execute..."

|

||||

fi

|

||||

replaceAndExec

|

||||

result=$?

|

||||

if [ $result = 0 ];then

|

||||

echo "ok: replace and execute done"

|

||||

else

|

||||

echo "error: replace and execute failed"

|

||||

exit 0

|

||||

fi

|

||||

sleep 3

|

||||

uploadLog t autoDeploy 100

|

||||

sleep 1

|

||||

}

|

||||

|

||||

main

|

||||

0

dm8Import.sh

Normal file


45

dumpRedis.sh

Executable file


@ -0,0 +1,45 @@

#!/bin/bash

# Backup directory
BACKUP_DIR="/tmp/redisDumps"
# Path of the Redis persistence file (adjust to match your Redis configuration)
# Parse command-line arguments
while [[ "$#" -gt 0 ]]; do
    case $1 in
        --dumpFile) REDIS_DUMP_FILE="$2"; shift ;;
        *) echo "Unknown parameter passed: $1"; exit 1 ;;
    esac
    shift
done

# Create the backup directory if it does not exist
mkdir -p $BACKUP_DIR

# Run the Redis BGSAVE command to create a snapshot
redis-cli BGSAVE

# Wait for persistence to finish; a few seconds of sleep (adjust as needed)
sleep 5

# Check for existing backup files and rename them
if [ -f $BACKUP_DIR/dump2.rdb ]; then
    echo "Removing existing dump2.rdb"
    rm -f $BACKUP_DIR/dump2.rdb
fi

if [ -f $BACKUP_DIR/dump1.rdb ]; then
    echo "Renaming dump1.rdb to dump2.rdb"
    mv $BACKUP_DIR/dump1.rdb $BACKUP_DIR/dump2.rdb
fi

if [ -f $BACKUP_DIR/dump.rdb ]; then
    echo "Renaming dump.rdb to dump1.rdb"
    mv $BACKUP_DIR/dump.rdb $BACKUP_DIR/dump1.rdb
fi

# Copy the new dump.rdb into the backup directory
echo "Copying new dump.rdb to $BACKUP_DIR"
cp $REDIS_DUMP_FILE $BACKUP_DIR/dump.rdb

echo "Backup completed successfully."
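dumpRedis.sh waits a fixed 5 seconds after `BGSAVE`. One possible refinement, sketched below, is to poll the Redis `LASTSAVE` command (which returns the Unix time of the last successful save) until it changes. The function name `wait_for_bgsave` and the `REDIS_CLI` override are assumptions for illustration; because `LASTSAVE` has one-second resolution, two saves within the same second would not be distinguished.

```shell
#!/bin/bash
# Command used to talk to Redis; overridable, defaults to redis-cli.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# Poll LASTSAVE until it differs from the value seen before BGSAVE.
# $1: LASTSAVE value recorded before the snapshot; $2: max tries (1s apart).
wait_for_bgsave() {
    local before=$1 tries=${2:-30} now
    while [ "$tries" -gt 0 ]; do
        now=$($REDIS_CLI LASTSAVE)
        if [ "$now" != "$before" ]; then
            return 0
        fi
        sleep 1
        tries=$((tries - 1))
    done
    return 1
}
```

Intended use: `before=$($REDIS_CLI LASTSAVE); $REDIS_CLI BGSAVE; wait_for_bgsave "$before" || exit 1`, placed before the dump file is copied.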

168

frontEndUpgrade.sh

Executable file


@ -0,0 +1,168 @@

|

||||

#!/bin/bash

|

||||

# Usage:

|

||||

# ./frontEndUpgrade.sh html.zip "/etc/nginx" "/home/admin.hq" "production/investigate/frontEnd"

|

||||

# ./frontEndUpgrade.sh html.zip "/var/www" "/home/ubuntu" "stage/investigate/frontEnd"

|

||||

fileName=$1 # html.zip zhuize.zip

|

||||

projectPath=$2 # /etc/nginx /var/www

|

||||

workPath=$3

|

||||

ossLogPath=$4

|

||||

user=$USER

|

||||

|

||||

protol=oss

|

||||

basePath=/var/tmp/autoDeploy

|

||||

|

||||

if [ -n "$5" ]; then

|

||||

user=$5

|

||||

fi

|

||||

|

||||

if [ ! -d $basePath ]; then

|

||||

mkdir $basePath -p

|

||||

fi

|

||||

|

||||

if [ ! -d $workPath ]; then

|

||||

echo workPath $workPath not exist!

|

||||

# exit 1

|

||||

fi

|

||||

|

||||

PATH=$PATH:/usr/bin:/usr/sbin:/usr/local/bin

|

||||

projectName="${fileName%.*}" # html, zhuize

|

||||

preName="${fileName%.*}"

|

||||

!

|

||||

function uploadLog()

|

||||

{

|

||||

ctype=$1

|

||||

identify=$2

|

||||

count=$3

|

||||

echo "current user is ${USER}"

|

||||

journalctl -n $count -xe -${ctype} $identify > ${workPath}/${identify}.log

|

||||

ossutil cp -f ${workPath}/${identify}.log ${protol}://${ossLogPath}/logs/${identify}.log

|

||||

if [ $? -eq 0 ]; then

|

||||

echo "ok: log ${identify} uploaded to oss!"

|

||||

return 0

|

||||

else

|

||||

echo "error: upload log ${identify} to oss failed!"

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

|

||||

function extractHere() {

|

||||

cd $projectPath

|

||||

rm $fileName -f || true
rm $projectName -rf || true

|

||||

cp $workPath/$fileName .

|

||||

unzip -oq $fileName

|

||||

mv dist $projectName

|

||||

chgrp $user $fileName

|

||||

chown $user $fileName

|

||||

echo "user: $user"

|

||||

chgrp $user $projectName -R

|

||||

chown $user $projectName -R

|

||||

cp ${workPath}/${fileName}_oss.info $projectName

|

||||

sleep 5

|

||||

md5Sum=$(md5sum $fileName)

|

||||

md5Sum="${md5Sum% *}"

|

||||

echo ${md5Sum} > ${projectName}/${fileName}_md5.txt

|

||||

}

|

||||

|

||||

function init(){

|

||||

cd $workPath

|

||||

if [ ! -f "$fileName" ];then

|

||||

echo "file $fileName not exist in $workPath !"

|

||||

# cd $projectPath

|

||||

# mkdir dist

|

||||

# echo "helo" > helo.txt

|

||||

# mv helo.txt dist

|

||||

# zip -r $fileName dist

|

||||

exit 0

|

||||

fi

|

||||

cd $workPath

|

||||

if [ ! -d "$projectPath" ]; then

|

||||

echo "path $projectPath does not exist!"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

rm dist -fr || true
rm $projectName -fr || true

|

||||

|

||||

cd $projectPath

|

||||

if [ ! -d "$projectName" ];then

|

||||

extractHere

|

||||

fi

|

||||

if [ ! -f "$fileName" ];then

|

||||

extractHere

|

||||

fi

|

||||

}

|

||||

|

||||

function compareMd5(){

|

||||

cd $workPath

|

||||

oriSum=$(md5sum $fileName)

|

||||

oriSum="${oriSum% *}"

|

||||

echo "locTemp file ${fileName} md5sum: ${oriSum}"

|

||||

|

||||

cd $projectPath

|

||||

desSum=$(md5sum $fileName)

|

||||

desSum="${desSum% *}"

|

||||

echo "project Path ${fileName} md5sum: ${desSum}"

|

||||

|

||||

if [ ${oriSum} = ${desSum} ];then

|

||||

return 0

|

||||

else

|

||||

return 1

|

||||

fi

|

||||

}

|

||||

|

||||

function replaceFile(){

|

||||

cd $projectPath

|

||||

if [ -f "$fileName"_bak2 ];then

|

||||

rm "${fileName}"_bak2 -fr

|

||||

fi

|

||||

if [ -f "${fileName}"_bak1 ];then

|

||||

mv ${fileName}_bak1 ${fileName}_bak2

|

||||

fi

|

||||

if [ -f "$fileName"_bak ];then

|

||||

mv ${fileName}_bak ${fileName}_bak1

|

||||

fi

|

||||

if [ -f "$fileName" ];then

|

||||

mv ${fileName} ${fileName}_bak

|

||||

fi

|

||||

extractHere

|

||||

return 0

|

||||

}

|

||||

|

||||

function verify() {

|

||||

cd /tmp/autoDeploy

|

||||

cd $projectPath/${preName}

|

||||

echo "the md5sum injected in ${projectPath}/${preName} is $(cat ${fileName}_md5.txt)"

|

||||

}

|

||||

|

||||

function main() {

|

||||

echo " "

|

||||

sleep 5

|

||||

echo "frontEndUpgrade start"

|

||||

init

|

||||

compareMd5

|

||||

result=$?

|

||||

if [ ${result} = 0 ];then

|

||||

echo "local temp file ${fileName} is same with ${projectPath},no need to replace"

|

||||

echo "ok: frontEndUpgrade done"

|

||||

echo " "

|

||||

exit 0

|

||||

else

|

||||

echo "local temp file $fileName is different from ${projectPath}, will replace..."

|

||||

fi

|

||||

replaceFile

|

||||

result=$?

|

||||

if [ $result = 0 ];then

|

||||

echo "ok: replaced done"

|

||||

else

|

||||

echo "error: replace failed"

|

||||

exit 0

|

||||

fi

|

||||

sleep 3

|

||||

verify

|

||||

echo " "

|

||||

uploadLog t autoDeploy 100

|

||||

}

|

||||

|

||||

main

|

||||
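frontEndUpgrade.sh takes an optional fifth argument naming the owner of the deployed files. A test written as `[ -n $5 ]` without quotes collapses to `[ -n ]` when the argument is empty, which is always true, so the fallback to `$USER` would be lost. The sketch below shows one way to express the default with parameter expansion; `resolve_owner` is a hypothetical helper, not part of the script.

```shell
#!/bin/bash
# Pick the file owner: the explicit argument when non-empty, else the fallback.
resolve_owner() {
    local requested=$1 fallback=$2
    echo "${requested:-$fallback}"
}
```

In the script this would amount to `user=$(resolve_owner "$5" "$USER")`, or simply `user=${5:-$USER}`.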

28

nacos.env

Normal file


@ -0,0 +1,28 @@

|

||||

SHELL=/bin/bash

|

||||

JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64/

|

||||

SSH_AUTH_SOCK=/tmp/ssh-U6ap8c5J2x/agent.4132

|

||||

PWD=/home/ubuntu/data/shell/autoDeploy

|

||||

LOGNAME=ubuntu

|

||||

XDG_SESSION_TYPE=tty

|

||||

MOTD_SHOWN=pam

|

||||

HOME=/home/ubuntu

|

||||

LANG=en_US.UTF-8

|

||||

LS_COLORS=rs=0:di=01;34:ln=01;36:mh=00:pi=40;33:so=01;35:do=01;35:bd=40;33;01:cd=40;33;01:or=40;31;01:mi=00:su=37;41:sg=30;43:ca=30;41:tw=30;42:ow=34;42:st=37;44:ex=01;32:*.tar=01;31:*.tgz=01;31:*.arc=01;31:*.arj=01;31:*.taz=01;31:*.lha=01;31:*.lz4=01;31:*.lzh=01;31:*.lzma=01;31:*.tlz=01;31:*.txz=01;31:*.tzo=01;31:*.t7z=01;31:*.zip=01;31:*.z=01;31:*.dz=01;31:*.gz=01;31:*.lrz=01;31:*.lz=01;31:*.lzo=01;31:*.xz=01;31:*.zst=01;31:*.tzst=01;31:*.bz2=01;31:*.bz=01;31:*.tbz=01;31:*.tbz2=01;31:*.tz=01;31:*.deb=01;31:*.rpm=01;31:*.jar=01;31:*.war=01;31:*.ear=01;31:*.sar=01;31:*.rar=01;31:*.alz=01;31:*.ace=01;31:*.zoo=01;31:*.cpio=01;31:*.7z=01;31:*.rz=01;31:*.cab=01;31:*.wim=01;31:*.swm=01;31:*.dwm=01;31:*.esd=01;31:*.jpg=01;35:*.jpeg=01;35:*.mjpg=01;35:*.mjpeg=01;35:*.gif=01;35:*.bmp=01;35:*.pbm=01;35:*.pgm=01;35:*.ppm=01;35:*.tga=01;35:*.xbm=01;35:*.xpm=01;35:*.tif=01;35:*.tiff=01;35:*.png=01;35:*.svg=01;35:*.svgz=01;35:*.mng=01;35:*.pcx=01;35:*.mov=01;35:*.mpg=01;35:*.mpeg=01;35:*.m2v=01;35:*.mkv=01;35:*.webm=01;35:*.ogm=01;35:*.mp4=01;35:*.m4v=01;35:*.mp4v=01;35:*.vob=01;35:*.qt=01;35:*.nuv=01;35:*.wmv=01;35:*.asf=01;35:*.rm=01;35:*.rmvb=01;35:*.flc=01;35:*.avi=01;35:*.fli=01;35:*.flv=01;35:*.gl=01;35:*.dl=01;35:*.xcf=01;35:*.xwd=01;35:*.yuv=01;35:*.cgm=01;35:*.emf=01;35:*.ogv=01;35:*.ogx=01;35:*.aac=00;36:*.au=00;36:*.flac=00;36:*.m4a=00;36:*.mid=00;36:*.midi=00;36:*.mka=00;36:*.mp3=00;36:*.mpc=00;36:*.ogg=00;36:*.ra=00;36:*.wav=00;36:*.oga=00;36:*.opus=00;36:*.spx=00;36:*.xspf=00;36:

|

||||

LC_TERMINAL=iTerm2

|

||||

SSH_CONNECTION=192.168.96.16 60271 192.168.96.39 22

|

||||

LESSCLOSE=/usr/bin/lesspipe %s %s

|

||||

XDG_SESSION_CLASS=user

|

||||

TERM=xterm-256color

|

||||

LESSOPEN=| /usr/bin/lesspipe %s

|

||||

USER=ubuntu

|

||||

LC_TERMINAL_VERSION=3.4.16

|

||||

SHLVL=1

|

||||

XDG_SESSION_ID=19

|

||||

CLASSPATH=.:/usr/lib/jvm/java-11-openjdk-amd64/lib:/lib

|

||||

XDG_RUNTIME_DIR=/run/user/1001

|

||||

SSH_CLIENT=192.168.96.16 60271 22

|

||||

XDG_DATA_DIRS=/usr/local/share:/usr/share:/var/lib/snapd/desktop

|

||||

PATH=/opt/apache-maven-3.8.6/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/usr/local/jar

|

||||

DBUS_SESSION_BUS_ADDRESS=unix:path=/run/user/1001/bus

|

||||

SSH_TTY=/dev/pts/0

|

||||

OLDPWD=/home/ubuntu

|

||||

8

ossDetect.sh

Executable file

@ -0,0 +1,8 @@

|

||||

#!/bin/bash

|

||||

|

||||

protol=oss

|

||||

cd /tmp/autoDeploy

|

||||

ossutil stat ${protol}://ztupload/html.zip > html_oss.info

|

||||

ossInfo=$(cat html_oss.info)

|

||||

out1=${ossInfo##*Etag}

|

||||

echo $out1

|

||||
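The script above keeps everything that follows the `Etag` label in the `ossutil stat` output. A minimal sketch of a narrower extraction, assuming the output holds one `Name : value` field per line; the helper name `extract_etag` and the sample text are hypothetical and not part of this commit:

```bash
# Hypothetical helper: print only the value of the "Etag" field read from stdin.
# Assumes each metadata field sits on its own line as "Name : value".
extract_etag() {
    awk -F':' '$1 ~ /^[ \t]*Etag[ \t]*$/ { gsub(/[ \t]/, "", $2); print $2 }'
}

# Made-up sample text standing in for real `ossutil stat` output.
sample='Content-Length : 1024
Etag           : 0123456789ABCDEF
Last-Modified  : 2024-01-01 10:00:00 +0800 CST'
printf '%s\n' "$sample" | extract_etag
```

Matching on the field name rather than on the text after it keeps later fields such as `Last-Modified` out of the result.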

85

ossUpgrade.sh

Executable file

@ -0,0 +1,85 @@

|

||||

#!/bin/bash

|

||||

#usage:

|

||||

# ./ossUpgrade.sh "stage/ztsjxxxt/frontEnd/" html.zip ztsjxxxt

|

||||

# set -xt

|

||||

PATH=$PATH:/usr/bin:/usr/sbin:/usr/local/bin

|

||||

ossPath=$1 # don't forget the trailing /

|

||||

fileName=$2

|

||||

preName="${fileName%.*}"

|

||||

|

||||

protol=oss

|

||||

|

||||

basePath=$3

|

||||

|

||||

if [ ! -d $basePath ]; then

|

||||

mkdir $basePath -p

|

||||

fi

|

||||

|

||||

function compare(){

|

||||

if [ ! -d $basePath ]; then

|

||||

mkdir $basePath

|

||||

fi

|

||||

cd $basePath

|

||||

if [ ! -f ${fileName}_oss.info ]; then

|

||||

touch ${fileName}_oss.info

|

||||

fi

|

||||

echo upstream ossPath: ${protol}://${ossPath}$fileName

|

||||

( ossutil stat ${protol}://${ossPath}$fileName | head -n -2) > ${fileName}_upstream_oss.info

|

||||

upstreamOssInfoMd5=$(md5sum ${fileName}_upstream_oss.info)

|

||||

upstreamOssInfoMd5="${upstreamOssInfoMd5% *}"

|

||||

locOssInfoMd5=$(md5sum ${fileName}_oss.info)

|

||||

locOssInfoMd5="${locOssInfoMd5% *}"

|

||||

echo "upstream $fileName ossInfo md5: |${upstreamOssInfoMd5}|"

|

||||

echo "localTmp $fileName ossInfo md5: |${locOssInfoMd5}|"

|

||||

echo "upstream:" $(pwd)/${fileName}_upstream_oss.info

|

||||

cat ${fileName}_upstream_oss.info

|

||||

echo "localTmp:" $(pwd)/${fileName}_oss.info

|

||||

cat ${fileName}_oss.info

|

||||

# When the upstream OSS file's metadata matches the locally cached metadata, no update is needed

|

||||

if [ "${upstreamOssInfoMd5}" = "${locOssInfoMd5}" ];then

|

||||

return 0

|

||||

else

|

||||

# When the metadata differs: if the upstream info is empty but the local cache is not, skip the update, since this is probably a false positive

|

||||

if [ "${upstreamOssInfoMd5}" = "d41d8cd98f00b204e9800998ecf8427e" ] && [ "${locOssInfoMd5}" != "d41d8cd98f00b204e9800998ecf8427e" ];then

|

||||

echo "maybe a mistake, ignore upgrade"

|

||||

return 0

|

||||

else

|

||||

return 1

|

||||

fi

|

||||

fi

|

||||

}

|

||||

|

||||

function downloadFromOss(){

|

||||

cd $basePath

|

||||

echo "fileName: " $fileName

|

||||

ossutil cp -f ${protol}://${ossPath}${fileName} ${fileName}_tmp

|

||||

rm -f "$fileName"

|

||||

mv ${fileName}_tmp $fileName

|

||||

( ossutil stat ${protol}://${ossPath}$fileName | head -n -2) > ${fileName}_oss.info

|

||||

}

|

||||

|

||||

function main(){

|

||||

echo " "

|

||||

echo "start ossUpgrade service"

|

||||

compare

|

||||

result=$?

|

||||

if [ $result = 0 ];then

|

||||

# uploadLog

|

||||

echo "local temp file $fileName is same with oss file,no need to replace"

|

||||

echo "end ossUpgrade service"

|

||||

echo " "

|

||||

exit 0

|

||||

else

|

||||

echo "local temp file $fileName is different from oss, will download..."

|

||||

fi

|

||||

downloadFromOss

|

||||

echo "end ossUpgrade service"

|

||||

echo " "

|

||||

}

|

||||

|

||||

main

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||
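The `compare` function above hashes both metadata files with `md5sum` and treats `d41d8cd98f00b204e9800998ecf8427e` (the MD5 of empty input) as "this file is empty". The same decision can be sketched without hashing, using `cmp -s` and the `-s` file test instead. This swaps in a different technique, the name `needs_download` is hypothetical, and the return convention is inverted (0 means a download is needed):

```bash
# Hypothetical helper: succeed (return 0) when the cached metadata is stale
# and the object should be downloaded again.
#   $1 = file holding the freshly fetched upstream metadata
#   $2 = file holding the metadata cached after the last download
needs_download() {
    local upstream_info=$1 local_info=$2

    # Byte-identical metadata: nothing changed upstream.
    if cmp -s "$upstream_info" "$local_info"; then
        return 1
    fi

    # Upstream metadata is empty while the cache is not: the stat call
    # most likely failed, so skip the update rather than act on it.
    if [ ! -s "$upstream_info" ] && [ -s "$local_info" ]; then
        return 1
    fi

    return 0
}
```

A caller could then write `if needs_download "${fileName}_upstream_oss.info" "${fileName}_oss.info"; then downloadFromOss; fi`.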

35

replacessh.sh

Normal file

@ -0,0 +1,35 @@

|

||||

#!/bin/bash

|

||||

|

||||

yum install -y openssl-devel zlib-devel

|

||||

wget https://www.openssl.org/source/openssl-1.1.1l.tar.gz

|

||||

tar -xzvf openssl-1.1.1l.tar.gz

|

||||

cd openssl-1.1.1l

|

||||

./config --prefix=/usr/local/openssl --openssldir=/usr/local/openssl shared zlib

|

||||

make

|

||||

make install

|

||||

cp -f /root/openssl-1.1.1l/libssl.so.1.1 /usr/lib64/ || true

|

||||

cp -f /root/openssl-1.1.1l/libcrypto.so.1.1 /usr/lib64/ || true

|

||||

|

||||

export PATH=/usr/local/openssl/bin:$PATH

|

||||

|

||||

ldconfig

|

||||

openssl version

|

||||

mv /usr/bin/openssl /usr/bin/openssl_old

|

||||

mv -f /usr/local/openssl/bin/openssl /usr/bin/openssl

|

||||

|

||||

cd ~

|

||||

wget https://cdn.openbsd.org/pub/OpenBSD/OpenSSH/portable/openssh-9.7p1.tar.gz

|

||||

tar -zxvf openssh-9.7p1.tar.gz

|

||||

cd openssh-9.7p1/

|

||||

./configure --prefix=/usr/local/openssh --with-ssl-dir=/usr/local/openssl --without-openssl-header-check

|

||||

|

||||

make

|

||||

make install

|

||||

|

||||

cp -r /etc/ssh /etc/ssh_backup

|

||||

systemctl stop sshd

|

||||

sleep 2

|

||||

cp -f /usr/local/openssh/sbin/sshd /usr/sbin/sshd

|

||||

cp -f /usr/local/openssh/bin/ssh /usr/bin/ssh

|

||||

sleep 2

|

||||

systemctl start sshd

|

||||

32

replacessh2.sh

Normal file

@ -0,0 +1,32 @@

|

||||

#!/bin/bash

|

||||

|

||||

tar -xzvf openssl-1.1.1l.tar.gz

|

||||

cd openssl-1.1.1l

|

||||

./config --prefix=/usr/local/openssl --openssldir=/usr/local/openssl shared zlib

|

||||

make

|

||||

make install

|

||||

cp -f /root/openssl-1.1.1l/libssl.so.1.1 /usr/lib64/ || true

|

||||

cp -f /root/openssl-1.1.1l/libcrypto.so.1.1 /usr/lib64/ || true

|

||||

|

||||

export PATH=/usr/local/openssl/bin:$PATH

|

||||

|

||||

ldconfig

|

||||

openssl version

|

||||

mv /usr/bin/openssl /usr/bin/openssl_old

|

||||

mv -f /usr/local/openssl/bin/openssl /usr/bin/openssl

|

||||

|

||||

cd ~

|

||||

tar -zxvf openssh-9.7p1.tar.gz

|

||||

cd openssh-9.7p1/

|

||||

./configure --prefix=/usr/local/openssh --with-ssl-dir=/usr/local/openssl --without-openssl-header-check

|

||||

|

||||

make

|

||||

make install

|

||||

|

||||

cp -r /etc/ssh /etc/ssh_backup

|

||||

systemctl stop sshd

|

||||

sleep 2

|

||||

\cp -f /usr/local/openssh/sbin/sshd /usr/sbin/sshd

|

||||

\cp -f /usr/local/openssh/bin/ssh /usr/bin/ssh

|

||||

sleep 2

|

||||

systemctl start sshd

|

||||

30

rsyncBack.sh

Executable file

@ -0,0 +1,30 @@

|

||||

#!/bin/bash

|

||||

|

||||

# Source and target directory paths

|

||||

source_dir=$1

|

||||

target_dir=$2

|

||||

|

||||

mkdir ${target_dir} -p || true

|

||||

|

||||

# Backup file name and directory

|

||||

backup_date=$(date +%Y-%m-%d)

|

||||

backup_dir="${target_dir}/backup_${backup_date}"

|

||||

backup_file="${backup_dir}.tar.gz"

|

||||

|

||||

# Back up the directory with rsync

|

||||

#rsync -a "$source_dir"

|

||||

rsync --timeout=900 "$source_dir" "$backup_dir" -urdvt

|

||||

|

||||

# Compress the backup directory

|

||||

tar -czf "$backup_file" "$backup_dir"

|

||||

rm ${backup_dir} -fr

|

||||

|

||||

# Delete the backup from 7 days ago

|

||||

delete_date=$(date -d "7 days ago" +%Y-%m-%d)

|

||||

delete_dir="${target_dir}/backup_${delete_date}.tar.gz"

|

||||

rm -f "$delete_dir"

|

||||

|

||||

# Write a log line

|

||||

current_date=$(date +%Y-%m-%d)

|

||||

current_time=$(date +%H:%M:%S)

|

||||

echo "[$current_date $current_time] backup succeeded"

|

||||
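The cleanup step above removes only the one archive dated exactly seven days ago, so an archive stays behind whenever a daily run is missed. A minimal sketch of age-based pruning, assuming GNU `find` and the `backup_<date>.tar.gz` naming used by the script; the function name `prune_backups` is hypothetical:

```bash
# Hypothetical helper: delete backup archives older than a number of days.
#   $1 = directory that holds the backup_*.tar.gz archives
#   $2 = retention in days (defaults to 7)
prune_backups() {
    local dir=$1 days=${2:-7}
    # -mtime +N matches files whose age, counted in whole 24-hour periods,
    # is greater than N.
    find "$dir" -maxdepth 1 -type f -name 'backup_*.tar.gz' -mtime +"$days" -delete
}
```

Calling `prune_backups "$target_dir" 7` after the `tar` step would then clear every stale archive in one pass, based on file modification time rather than on the date in the name.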

62

startDataCenter.sh

Executable file

@ -0,0 +1,62 @@

|

||||

#!/bin/bash

|

||||

|

||||

PATH=$PATH:/usr/bin/:/usr/local/bin/:/usr/sbin

|

||||

dataCenterPath=$1

|

||||

profile=$2

|

||||

jars=( \

|

||||

data-metadata-collect-fileset\

|

||||

data-metadata-collect\

|

||||

data-metadata-dic\

|

||||

data-metadata-group\

|

||||

data-metadata-mapping\

|

||||

data-metadata-model\

|

||||

data-sources\

|

||||

data-standard\

|

||||

data-metadata-interface\

|

||||

data-asset\

|

||||

data-quality\

|

||||

)

|

||||

|

||||

function startModule()

|

||||

{

|

||||

moduleName=$1

|

||||

|

||||

|

||||

if [ ! -f jars/"${moduleName}.jar" ];then

|

||||

echo "startDataCenter: file jars/${moduleName}.jar does not exist!"

|

||||

else

|

||||

#${JAVA_HOME}bin/java -Xms256m -Xmx512m -Xss512K -Dspring.profiles.active=${profile} -Dspring.config.location=file:${dataCenterPath}/config/ -jar jars/${moduleName}.jar &

|

||||

/usr/bin/java -Dspring.profiles.active=${profile} -jar jars/${moduleName}.jar &

|

||||

# > logs/${moduleName}.log &

|

||||

echo "${!}" > pids/common/${moduleName}.pid

|

||||

fi

|

||||

sleep 1

|

||||

}

|

||||

|

||||

function checkDir()

|

||||

{

|

||||

directory_path=$1

|

||||

|

||||

if [ ! -d "$directory_path" ]; then

|

||||

echo "Directory does not exist, creating it..."

|

||||

mkdir -p "$directory_path"

|

||||

echo "Directory created successfully!"

|

||||

else

|

||||

echo "Directory already exists."

|

||||

fi

|

||||

}

|

||||

|

||||

function main(){

|

||||

cd ${dataCenterPath}

|

||||

checkDir "logs"

|

||||

checkDir "pids/common"

|

||||

for jar in ${jars[@]}

|

||||

do

|

||||

startModule $jar

|

||||

done

|

||||

}

|

||||

|

||||

main

|

||||

|

||||

|

||||
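The start script above writes one PID file per module under `pids/common/` but never checks whether a module is already running. A minimal sketch of such a check, assuming the PID files contain a single process ID; the function name `is_running` is hypothetical:

```bash
# Hypothetical helper: succeed when the PID recorded in a file belongs to a
# live process that the current user is allowed to signal.
#   $1 = path to the PID file
is_running() {
    local pid_file=$1 pid
    [ -f "$pid_file" ] || return 1
    pid=$(cat "$pid_file")
    # kill -0 sends no signal; it only reports whether the process can be signalled.
    [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}
```

`startModule` could call `is_running "pids/common/${moduleName}.pid"` and skip the launch when it succeeds. Note that `kill -0` also fails for a live process owned by another user, so the check is only reliable when the same account starts and inspects the modules.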

32

startDataCenterBase.sh

Executable file

@ -0,0 +1,32 @@

|

||||

#!/bin/bash

|

||||

|

||||

PATH=$PATH:/usr/bin/:/usr/local/bin/:/usr/sbin

|

||||

dataCenterPath=$1

|

||||

profile=$2

|

||||

|

||||

function startModule()

|

||||

{

|

||||

moduleName=$1

|

||||

if [ ! -f jars/"$moduleName".jar ];then

|

||||

echo "startDataCenter: file $(pwd)/jars/${moduleName}.jar does not exist!"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

#${JAVA_HOME}bin/java -Xms256m -Xmx512m -Xss512K -Dspring.profiles.active=${profile} -Dspring.config.location=file:${dataCenterPath}/config/ -jar jars/${moduleName}.jar &

|

||||

${JAVA_HOME}/bin/java -Dspring.profiles.active=${profile} -jar jars/${moduleName}.jar &

|

||||

# > logs/${moduleName}.log &

|

||||

echo "${!}" > pids/base/${moduleName}.pid

|

||||

sleep 1

|

||||

}

|

||||

|

||||

function main(){

|

||||

cd ${dataCenterPath}

|

||||

startModule zlt-uaa

|

||||

startModule user-center

|

||||

startModule sc-gateway

|

||||

startModule back-web

|

||||

}

|

||||

|

||||

main

|

||||

|

||||

|

||||

31

startDataCenterBase2.sh

Executable file

@ -0,0 +1,31 @@

|

||||

#!/bin/bash

|

||||

|

||||

PATH=$PATH:/usr/bin/:/usr/local/bin/:/usr/sbin

|

||||

dataCenterPath=$1

|

||||

profile=$2

|

||||

|

||||

function startModule()

|

||||

{

|

||||

cd ${dataCenterPath}

|

||||

moduleName=$1

|

||||

if [ ! -f jars/"$moduleName".jar ];then

|

||||

echo "startDataCenter: file $(pwd)/jars/${moduleName}.jar does not exist!"

|

||||

exit 0

|

||||

fi

|

||||

|

||||

#${JAVA_HOME}bin/java -Xms256m -Xmx512m -Xss512K -Dspring.profiles.active=${profile} -Dspring.config.location=file:${dataCenterPath}/config/ -jar jars/${moduleName}.jar &

|

||||

${JAVA_HOME}/bin/java -Dspring.profiles.active=${profile} -jar jars/${moduleName}.jar &

|

||||

# > logs/${moduleName}.log &

|

||||

echo "${!}" > pids/base/${moduleName}.pid

|

||||

sleep 1

|

||||

}

|

||||

|

||||

function main(){

|

||||

cd ${dataCenterPath}

|

||||

startModule zlt-uaa

|

||||

startModule user-center

|

||||

startModule sc-gateway

|

||||

startModule back-web

|

||||

}

|

||||

|

||||

main

|

||||

27

stopDataCenter.sh

Executable file

@ -0,0 +1,27 @@

|

||||

#!/bin/bash

|

||||

PATH=$PATH:/usr/bin/:/usr/local/bin/:/usr/sbin

|

||||

dataCenterPath=$1

|

||||

|

||||

function killIt()

|

||||

{

|

||||

pid=$1

|

||||

kill -9 ${pid}

|

||||

}

|

||||

|

||||

function fetch()

|

||||

{

|

||||

for file in ${dataCenterPath}/pids/common/*.pid

|

||||

do

|

||||

if [ -f "$file" ]

|

||||

then

|

||||

killIt $(cat $file) || true

|

||||

fi

|

||||

done

|

||||

}

|

||||

|

||||

function main()

|

||||

{

|

||||

fetch

|

||||

}

|

||||

|

||||

main

|

||||
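The stop script above sends `kill -9` straight away, which gives a JVM no chance to run its shutdown hooks. A minimal sketch of a gentler variant that tries `SIGTERM` first and falls back to `SIGKILL` after a timeout; the function name `stop_pid` and the default of 10 seconds are assumptions, not part of this commit:

```bash
# Hypothetical helper: stop a process gracefully, then forcefully if needed.
#   $1 = process ID
#   $2 = seconds to wait before escalating to SIGKILL (defaults to 10)
stop_pid() {
    local pid=$1 timeout=${2:-10} waited=0

    # Ask the process to exit; if the signal cannot be delivered,
    # treat the process as already gone.
    kill -TERM "$pid" 2>/dev/null || return 0

    # Poll once per second until the process disappears or the timeout expires.
    while kill -0 "$pid" 2>/dev/null; do
        if [ "$waited" -ge "$timeout" ]; then
            kill -KILL "$pid" 2>/dev/null
            break
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}
```

Inside the loop over the PID files, `stop_pid "$(cat "$file")" 10` would take the place of the `killIt` call.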

27

stopDataCenterBase.sh

Executable file

@ -0,0 +1,27 @@

|

||||

#!/bin/bash

|

||||

PATH=$PATH:/usr/bin/:/usr/local/bin/:/usr/sbin

|

||||

dataCenterPath=$1

|

||||

|

||||

function killIt()

|

||||

{

|

||||

pid=$1

|

||||

/usr/bin/kill -9 ${pid}

|

||||

}

|

||||

|

||||

function fetch()

|

||||

{

|

||||

for file in ${dataCenterPath}/pids/base/*.pid

|

||||

do

|

||||

if [ -f "$file" ]

|

||||

then

|

||||

killIt $(cat $file) || true

|

||||

fi

|

||||

done

|

||||

}

|

||||

|

||||

function main()

|

||||

{

|

||||

fetch

|

||||

}

|

||||

|

||||

main

|

||||

20

systemdConfs/common/checkPort.service

Normal file

@ -0,0 +1,20 @@

|

||||

[Unit]

|

||||

Description=restartPort

|

||||

After=network.target auditd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=root

|

||||

Group=root

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=checkPort

|

||||

SyslogFacility=local0

|

||||

ExecStartPre=/bin/bash -c "/home/ubuntu/data/shell/autoDeploy/checkPort.sh localhost 53 'systemctl restart dnsmasq.service'"

|

||||

ExecStart=/bin/bash -c "echo 'checkPort done'"

|

||||

|

||||

Restart=on-failure

|

||||

RestartSec=300

|

||||

TimeoutSec=255

|

||||

RestartPreventExitStatus=255

|

||||

9

systemdConfs/common/checkPort.timer

Normal file

@ -0,0 +1,9 @@

|

||||

[Unit]

|

||||

Description=Run checkPort every 3 minutes

|

||||

|

||||

[Timer]

|

||||

OnCalendar=*:0/3

|

||||

Persistent=true

|

||||

|

||||

[Install]

|

||||

WantedBy=timers.target

|

||||

@ -0,0 +1,22 @@

|

||||

[Unit]

|

||||

Description=checkService

|

||||

After=network.target auditd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=root

|

||||

Group=root

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=autoDeploy

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/root

|

||||

ExecStartPre=/etc/systemd/system/frontEndUpgrade.sh html.zip "/etc/nginx" "/home/admin.hq" "production/ztsjxxxt"

|

||||

ExecStartPre=/etc/systemd/system/frontEndUpgrade.sh dataCenter.zip "/etc/nginx" "/home/admin.hq" "production/dataCenter"

|

||||

ExecStartPre=/etc/systemd/system/checkService.sh ztsjxxxt.jar "/usr/local/jar" ztsjxxxt "/home/admin.hq/" "production/ztsjxxxt"

|

||||

ExecStart=/usr/bin/echo "checkService checked done"

|

||||

ExecStartPost=/usr/bin/systemctl reload nginx

|

||||

Restart=on-failure

|

||||

RestartPreventExitStatus=255

|

||||

@ -0,0 +1,26 @@

|

||||

[Unit]

|

||||

Description=kkFileView

|

||||

After=network.target sshd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=admin.hq

|

||||

Group=admin.hq

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=kkFileView

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/usr/local/fileview/kkFileView-4.0.0/bin

|

||||

EnvironmentFile=/etc/systemd/system/kkFileView.env

|

||||

ExecStart=/usr/local/jdk/jdk1.8.0_251/bin/java -Dfile.encoding=UTF-8 -Dspring.config.location=../config/application.properties -jar kkFileView-4.0.0.jar

|

||||

ExecStop=/bin/kill -- $MAINPID

|

||||

TimeoutStopSec=5

|

||||

KillMode=process

|

||||

Restart=on-failure

|

||||

RestartSec=30

|

||||

|

||||

[Install]

|

||||

Alias=kkFileView

|

||||

WantedBy=multi-user.target

|

||||

@ -0,0 +1,21 @@

|

||||

[Unit]

|

||||

Description=ossUpgrade

|

||||

After=network.target auditd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=admin.hq

|

||||

Group=admin.hq

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=autoDeploy

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/home/admin.hq

|

||||

ExecStartPre=/etc/systemd/system/ossUpgrade.sh "production/ztsjxxxt/frontEnd/" html.zip ztsjxxxt

|

||||

ExecStartPre=/etc/systemd/system/ossUpgrade.sh "production/dataCenter/frontEnd/" dataCenter.zip

|

||||

ExecStartPre=/etc/systemd/system/ossUpgrade.sh "production/ztsjxxxt/backEnd/" ztsjxxxt.jar ztsjxxxt

|

||||

ExecStart=/usr/bin/echo "ossUpgrade checked done"

|

||||

Restart=on-failure

|

||||

RestartPreventExitStatus=255

|

||||

@ -0,0 +1,27 @@

|

||||

[Unit]

|

||||

Description=ztsjxxxt

|

||||

After=network.target sshd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=admin.hq

|

||||

Group=admin.hq

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=backEnd

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/usr/local/jar

|

||||

EnvironmentFile=/etc/systemd/system/javaenv.env

|

||||

ExecStartPre=/etc/systemd/system/backEndUpgrade.sh ztsjxxxt.jar "/usr/local/jar"

|

||||

ExecStart=/usr/local/jdk/jdk1.8.0_251/bin/java -jar ztsjxxxt.jar

|

||||

ExecStop=/bin/kill -- $MAINPID

|

||||

TimeoutStopSec=5

|

||||

KillMode=process

|

||||

Restart=on-failure

|

||||

RestartSec=30

|

||||

|

||||

[Install]

|

||||

Alias=ztsjxxxt

|

||||

WantedBy=multi-user.target

|

||||

@ -0,0 +1,39 @@

|

||||

[Unit]

|

||||

Description=checkService

|

||||

After=network.target auditd.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=root

|

||||

Group=root

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=autoDeploy

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/home/admin.hq

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/frontEndUpgrade.sh dataCenter.zip "/var/www" "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

#ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-collection.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-collect-fileset.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-collect.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-group.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-mapping.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-model.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-sources.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-standard.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-interface.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

#ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-asset.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/checkService.sh data-metadata-dic.jar "/application/backend/data_center/jars" dataCenter "/tmp/autoDeploy" "ztupload/production/dataCenter"

|

||||

ExecStart=/usr/bin/echo "checkService checked done"

|

||||

ExecStartPost=/usr/bin/systemctl reload nginx

|

||||

Restart=on-failure

|

||||

RestartSec=10s

|

||||

TimeoutSec=500s

|

||||

RestartPreventExitStatus=255

|

||||
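systemd expects each directive of a unit file on a single line, unless the line is continued with a trailing backslash. A minimal sketch of a sanity check for unit files with many long `ExecStartPre=` entries like the one above; the function name `check_unit_lines` is hypothetical, and the check deliberately ignores backslash continuations, so it will flag those too:

```bash
# Hypothetical helper: list lines of a unit file that are neither blank,
# a comment, a [Section] header, nor a Key=value directive.
#   $1 = path to the unit file
check_unit_lines() {
    awk '
        /^[ \t]*$/               { next }   # blank line
        /^[ \t]*[#;]/            { next }   # comment
        /^\[[A-Za-z]+\]$/        { next }   # section header such as [Service]
        /^[A-Za-z][A-Za-z0-9]*=/ { next }   # directive such as Restart=on-failure
        { printf "%d: %s\n", NR, $0 }       # anything else is suspicious
    ' "$1"
}
```

Running it against a unit file before `systemctl daemon-reload` prints the number and text of every line that looks like a fragment of a wrapped directive, and prints nothing when all lines have a recognised shape.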

@ -0,0 +1,39 @@

|

||||

[Unit]

|

||||

Description=dataCenter

|

||||

After=network.target sshd.service

|

||||

|

||||

[Service]

|

||||

Type=forking

|

||||

User=admin.hq

|

||||

Group=admin.hq

|

||||

|

||||

StandardOutput=syslog

|

||||

StandardError=syslog

|

||||

SyslogIdentifier=backEnd

|

||||

SyslogFacility=local0

|

||||

|

||||

WorkingDirectory=/application/backend/data_center/

|

||||

EnvironmentFile=/home/admin.hq/data/shell/autoDeploy/nacos.env

|

||||

Environment="JAVA_HOME=/usr/local/jdk1.8.0_161/" "workPath=/application/backend/data_center/"

|

||||

ExecStartPre=/usr/bin/sleep 15

|

||||

#ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-collection.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-collect-fileset.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-collect.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-group.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-mapping.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-model.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-sources.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-standard.jar "${workPath}jars"

|

||||

ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-metadata-interface.jar "${workPath}jars"

|

||||

#ExecStartPre=/home/admin.hq/data/shell/autoDeploy/backEndUpgrade.sh data-asset.jar "${workPath}jars"

|

||||